AR Personal Assistant

Experimenting with AR interaction design in the workplace

TYPE: Team Project

TEAM: Rochelle Campbell, Maria Natalia Costa, Megan St. Andrew, Fan Wang, Wei Zhang

COURSE: Intro to AR/VR Application Design | UMSI

TIMESPAN: Fall 2019

PROBLEM STATEMENT: How might we design the ultimate personal assistant to better optimize the time spent in meetings?

TOOLS:

Figma

Adobe After Effects

Adobe Media Encoder

Adobe Premiere Pro

Cinema 4D

iMovie

METHODS:

Sketching

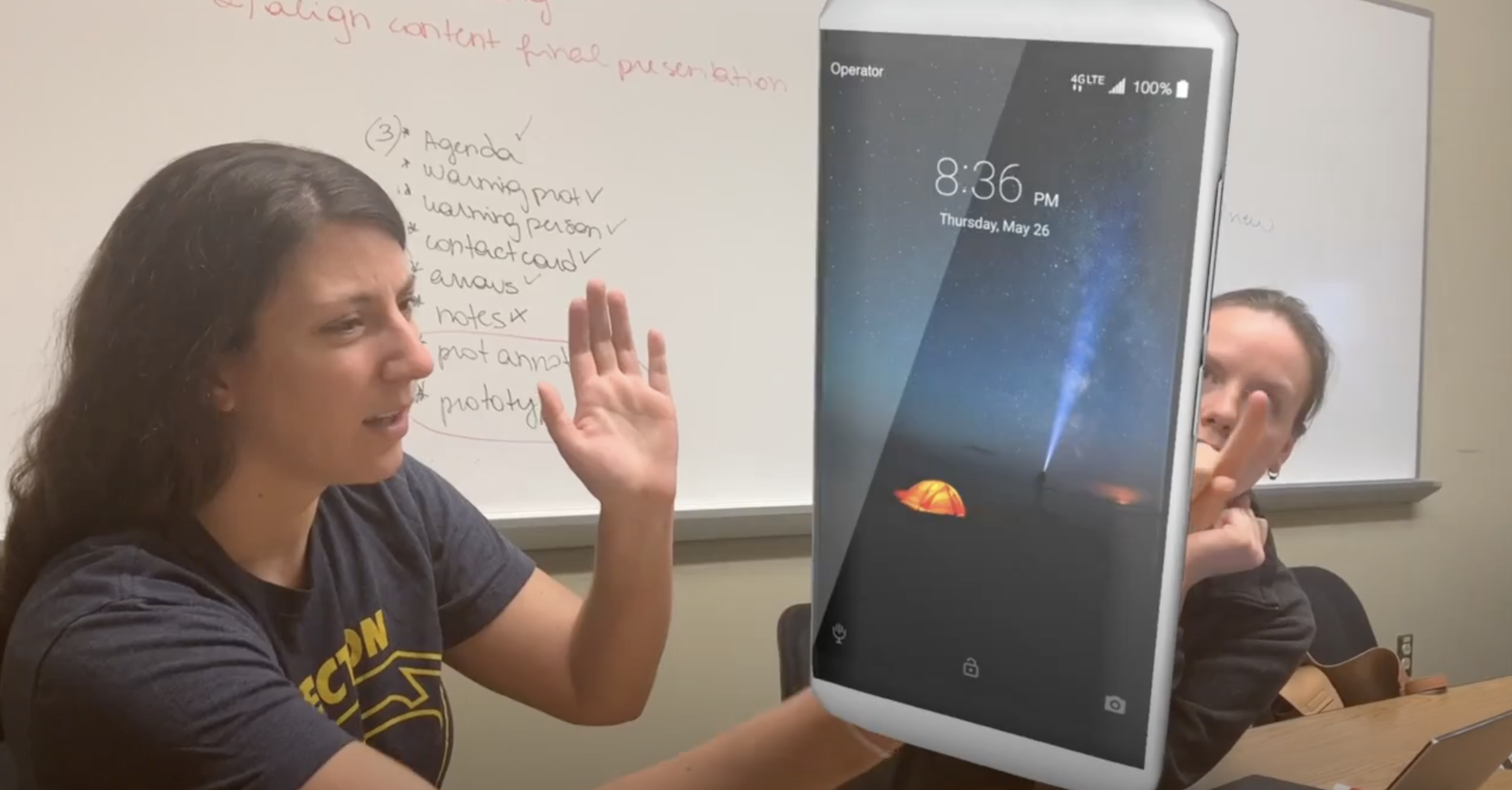

Physical prototyping

Bodystorming

What is the “ultimate personal assistant?”

Our team was originally tasked with exploring what the ultimate personal assistant would look like in AR or VR. After several sketching sessions, we ultimately focused on the following problem statement:

How might we design the ultimate personal assistant to better optimize the time spent in meetings?

Project goals

Unlike other projects whose main focus is research insights and actionable next steps, the main goal of this work was to explore interaction design solutions for a wearable AR device. In other words, try stuff out, have fun, and see what works.

Solution

SOLUTION TECHNOLOGY: wearable AR device (examples: HoloLens, Google Glass, etc.)

Through further sketch ideation and bodystorming activities, the team focused on the following “optimize meeting time” tasks and their associated interaction designs:

Reminds us if we are forgetting something for the meeting

Keeps track of the meeting agenda and notes in real time

Enables sharing of files and collaboration (as long as other participants are also wearing an AR device)

Reminds us if we need to follow up with someone at the meeting

Project takeaways

Brainstorming methodologies

Our team found sketching to be one of the more powerful brainstorming activities for identifying the main tasks we wanted to focus on. In essence, it served as a necessary “pre-requirements” phase that helped the team ideate and ultimately align on our main goals.

However, the most powerful methodology for designing the actual AR interactions was bodystorming with physical prototypes. Actually getting up, moving around, and inhabiting the physical space allowed us to:

Understand where digital objects should be placed in space relative to the AR viewer

Test different gesture interactions (e.g. air taps, open palms, gaze, etc.)

Experiment with which interactions (voice, gesture, gaze, etc.) were most natural for a given situation

Interaction design best practices

Physical prototyping is still king. Build all digital objects as paper prototypes and bodystorm with them first to quickly map out interactions, movement, and content.

Use sound to your advantage. Adding an interface onto an already chaotic world means that users need even more help noticing the things that are most important, especially when those things are out of view.

Always consider how an interaction could best be served: voice, gesture, gaze, or a mix of all three? Bodystorming can help you experiment with what feels most natural.

Questions still left to explore

When is it best to use voice interaction vs. gesture interactions vs. gaze interactions?

Should the choice between voice and gesture interaction ultimately be left to the user? (e.g. should we design both voice AND gesture versions of every interaction?)

What does this mean in terms of accessibility?

How does gaze factor into interaction design? What are the major use cases for gaze? Most of our people-based notifications relied on gaze rather than gesture or voice. We found that gaze is a more private kind of interaction that is far less noticeable to another person than voice and gestures.

Our solution assumed that everyone in the meeting was also wearing an AR device.

What does this mean for privacy?

Does this mean all workers would be required to wear an AR device while working?

What if there is a guest at the company who does not have an AR device?